Armageddon or harmony? The ultimate computer game

The need for control of AI is urgent, as unchecked development could lead to significant consequences, including human redundancy. Matt Minshall explores the evolution of AI, the dangers surrounding its misuse, and proposes ways to harness its power without compromising humanity’s future.


AI is the latest technological phenomenon that has captured people's attention. The concept is not new, but its development has accelerated at an unprecedented rate recently. It has great potential for good, but the options for misuse may be greater. The situation has become a computer game that cannot be stopped and that changes faster than the player can respond. There is a dawning realisation that winning may not be an option and that the game is no longer only virtual.

AI should be of great benefit to mankind. However, without proper controls, it will become destructive and easy to exploit – and could possibly lead to terminal human redundancy. This blog first looks at AI’s evolution and the implications of the word ‘intelligence,’ then offers scenarios of current concerns and possible futures, and concludes with ideas for guarding against misuse without losing the great potential.

The idea of human-made entities with consciousness and intelligence, along with the dangers of manipulating the natural world, is deeply embedded in ancient tales – though none of these stories end well for humans. The study of logic and formal reasoning evolved into the programmable digital computer, a machine based on abstract mathematical reasoning. Concerns about these dangers are therefore not new, but they lag behind the pace of development.

The use of ‘intelligence’ for machine learning deserves some thought. Intelligence covers the ability to acquire and understand knowledge. It can also refer to the gathering of general information as well as secrets about an actual or potential opponent. A machine can be programmed to acquire, store, and regurgitate knowledge, but it lacks emotional reasoning and will never comprehend the ‘why’ behind answers. When a student tells a machine to answer an essay question, the response is simply a rote regurgitation of formats and manipulated plagiarism. The recent warning that AI technology has already run out of its primary training resource, human-created data, sounds ridiculous in concept but is a sad prediction. If machines no longer benefit from human creativity, the future will be an increasingly watered-down world of mentally degrading blandness. No machine can replace the emotional intellect that marks humankind as different from other life forms. A machine can reproduce the sound of laughter and explain in words the physiological and psychological components behind it, but it cannot feel the associated human emotions.

What if humans had electronic brains or robots had human ones? The idea of robots as humanoid hybrids, with frail body parts replaced by mechanical systems operated by a human brain, or vice versa, is no longer a fantasy. The possible evolution of this is both exciting and concerning. It could be of great help to the ill, but beyond that, it flirts with the concept of Frankenstein’s monster.

The fear of robots replacing humans as a workforce is justified, but not new. From Plato’s concern that writing would “implant forgetfulness in men's souls” to worries that the printing press would degrade the calligraphist, fear of automation replacing humans has persisted – from farming and factory tasks to AI agents. The anxiety of job replacement by automata is universal and growing. The argument that people will be retrained for work and lifestyle has some merit but is already redundant. Elon Musk suggests that working will be a choice for humans in the future, but what will they do? When those now at retirement age were born, the world population was around three billion. It is now around eight billion and rising daily. How will all these people be fed and occupied? Will humans become mammals to be fed and managed through the sedation of ‘smart’ phone screen images? If they have no role but leisure, the clinical logic of a machine might conclude that humans are redundant. One possible safeguard is that, because machines evolved to serve humans, without humans the machines are themselves redundant.

Global social media has already produced multifarious opinions and predictions in response to Meta’s scrapping of fact-checking. Free speech is highly desirable, but it is a lethal weapon in the hands of the irresponsible.

A human world seeking exclusively the entitlement to rights is on the path to anarchy, but when rights are balanced with responsibility, harmony can be achieved. Freedom of speech is embedded in the Universal Declaration of Human Rights, but increasingly ignored is the provision in the International Covenant on Civil and Political Rights that the exercise of these rights carries “special duties and responsibilities,” particularly “for respect of the rights or reputations of others.” If a machine regurgitates a manipulated falsehood damaging to an individual, who is responsible? Is it the machine, the redundant programmer, or the source human?

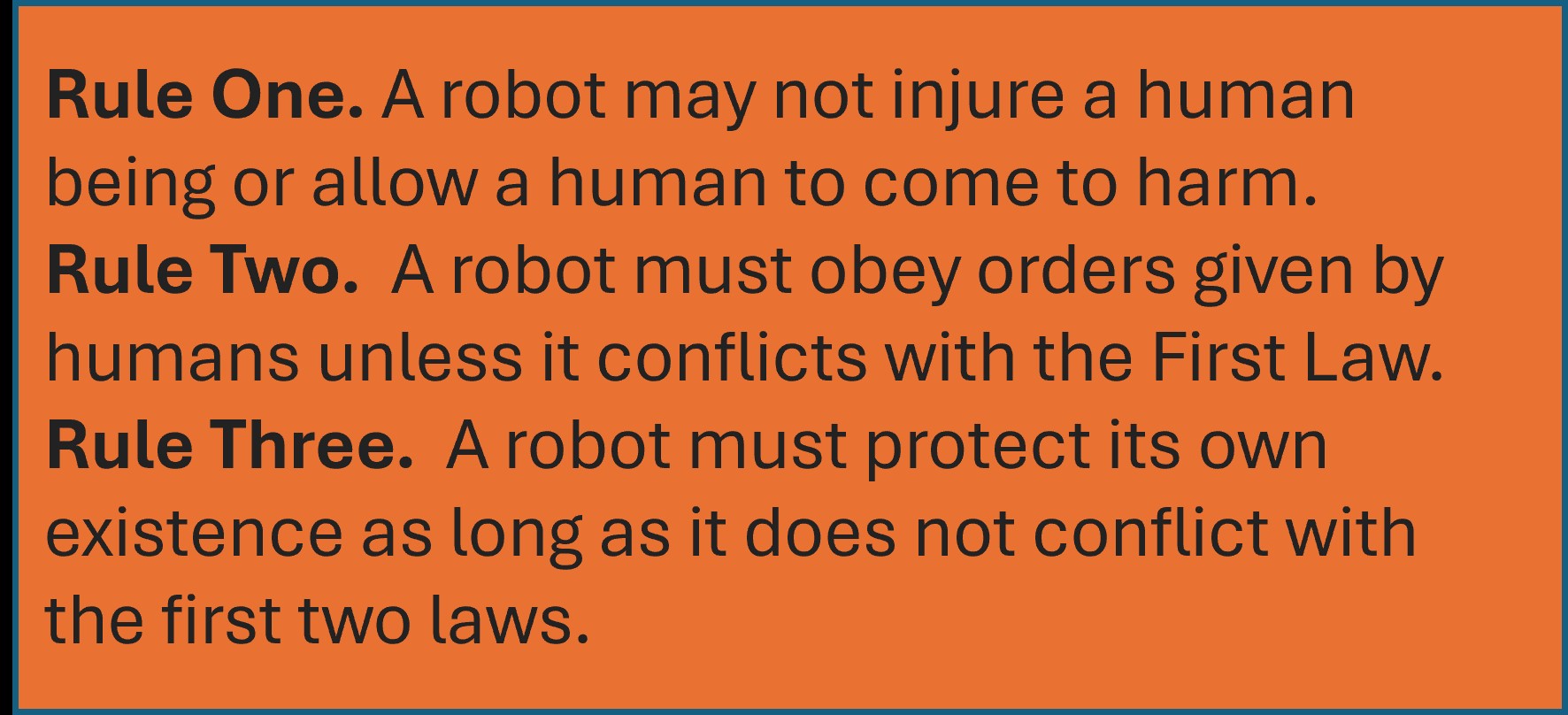

Humans must remain the masters of their destiny. The awareness of the danger of losing control is not new. From antiquity, ideas about artificial men and thinking machines are legion. Thoughts on limiting the danger were formulated in the 1940s by Isaac Asimov and set out as the three laws of robotics in his story Runaround, published in March 1942.

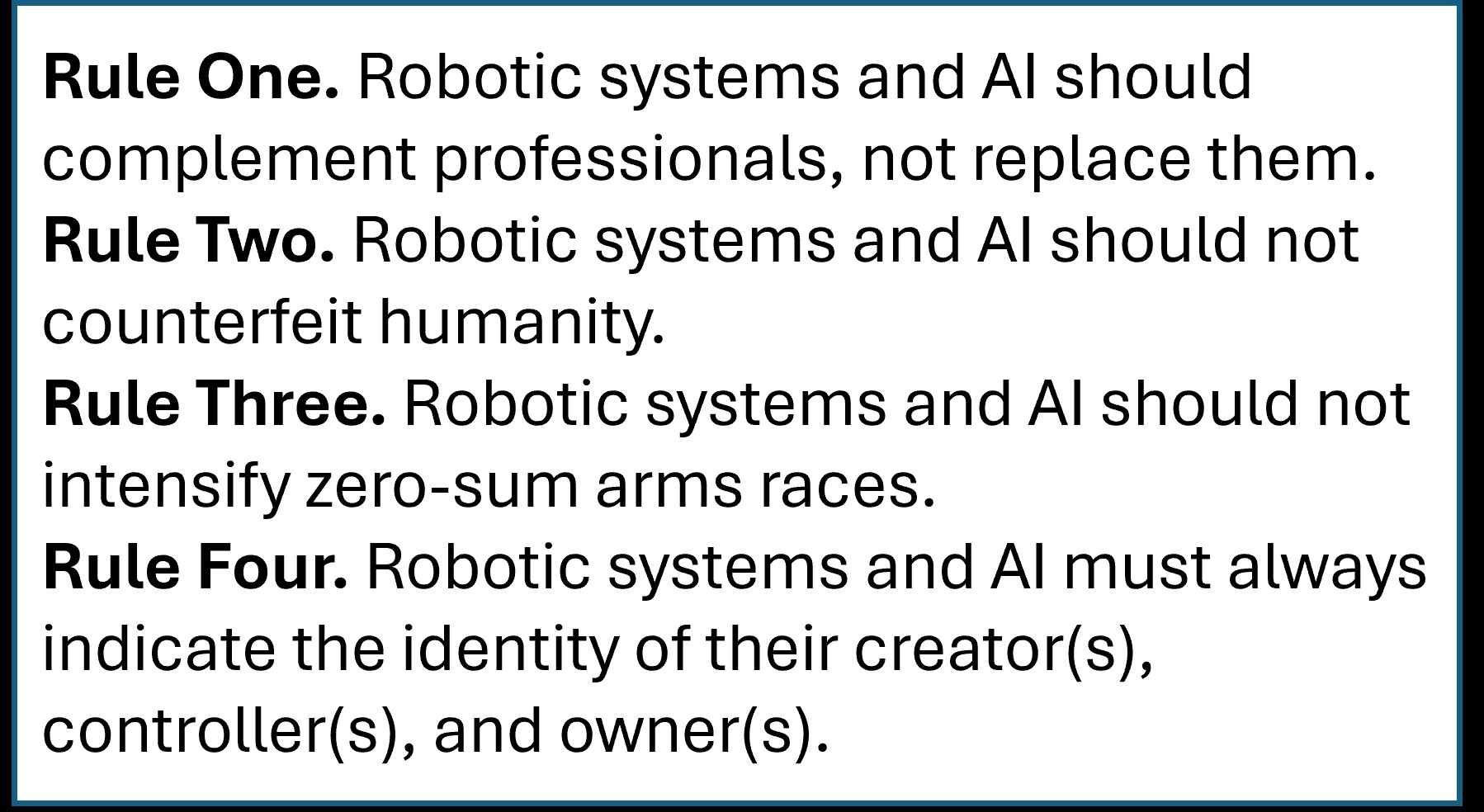

Rather than focusing on legislative tweaks to counter each societal threat as it emerges, the laws of robotics should be formalised for AI, with the key factor that it must not be more than a tool controlled by humans. Professor Frank Pasquale's four new laws propose a strategic update.

Although the new laws are directed toward the people building robots rather than the robots themselves, like the old laws they assume a world where legislation is made by rational people free from the evil which occupies the minds of the blinkered and the greedy. A more timely and perhaps more effective wording for the new laws would use ‘may’ in the first and ‘must’ rather than ‘should’ in the second and third.

Otto Barten and Roman Yampolskiy's 2023 article in Time suggests that AI may already be out of control. A reflection from the past is in Goethe’s poem The Sorcerer’s Apprentice, utilised by Neil Lawrence in his thoughts on The Open Society and its AI. In the poem, a young apprentice learns one of his master’s spells and deploys it to help with his chores, but it gets out of control. The master returns, and order is restored. Whether the creations of the apprentices who build AI are still controllable by the human master is the question whose answer may decide the fate of humanity.